I’ve written this essay for the AI Worldview Prize, a competition held by FTX Future Fund in which they ask for essays that might change their view on Artificial General Intelligence (AGI) and its risks, which they take very seriously. As I’ve stated before, I still think the prize money is way too big for this. But, as long as it is this big, I will submit an essay, as I could use the money for my pharmaceutical endeavors, or, at the lower end, to pay my rent.

There are a couple different ways to win money in this essay contest. The ones that pertain to this essay are as follows:

1. $1.5M for changing their probability of “AGI will be developed by January 1, 2043” from 20% to below 3%

2. $200k for publishing the canonical critique on whether AGI will be developed by January 1, 2043, whether or not it changes their probability estimates significantly

3. $50k to the three published analyses that most inform the Future Fund’s overall perspective on these issues, and $15k for the next 3-10 most promising contributions to the prize competition

I don’t expect to win any of these prizes, mainly because the Future Fund’s way of thinking about these issues is alien to me. I don’t really understand how you can assign an exact numeric probability to AGI being developed by 2043, given how many assumptions go into whatever model you’re using to estimate it. And, given the uncertainties involved, I really don’t understand what information would change your probability estimate from 20% to 2%.

I mean, I used to study geosciences, and there were scores of papers arguing about how one would estimate the probability of, say, 1.5 degrees Celsius warming by the year 2050. To be clear, these weren’t even arguments about the probability of 1.5 degrees of warming by 2050, although there were plenty of those. These were arguments about how you would even make that sort of argument. So, these papers would address issues like:

- What kind of assumptions should you have to state in advance?

- What are the probabilities of those assumptions, and how should those probabilities affect the ultimate probability of warming?

- What sort of models are allowed? How should improvements in the models or in the computers used to run the models be incorporated into the estimates?

- How should these models incorporate important assumptions if the assumptions don’t have a lot of data backing them up?

- How should models update with updated information?

As you can probably tell, these are really important issues to address if you want to be able to converge on common estimates of probability for future events! If these issues aren’t addressed, probability estimates won’t even be speaking the same language. And, if you ever read a report from the Intergovernmental Panel on Climate Change (IPCC), you’ll see that all of these issues are covered in depth before it states its probabilities of various future events.

If the IPCC didn’t do this, and instead stated its probabilities of climate-change-related events the way the FTX Future Fund does with AGI, with zero explanation of what models were used to estimate these probabilities, the assumptions behind those models, or the certainty of those assumptions, I’m pretty sure geoscientists would riot. Or, at the very least, they’d complain a lot and try to get people fired. If FTX Future Fund wants to turn AGI forecasting into a serious field, I would definitely advise them to take a page from the IPCC in this respect at least.

All of that being said, for the sake of this contest, I’m arguing that the FTX Future Fund’s estimate of the probability of AGI by 2043 should be reduced to 2%. The short version is that before AGI, there are going to be intermediate-level AIs. These intermediate-level AIs will have some highly publicized mishap (or, possibly, be involved in some scary military action). The public backlash and subsequent regulatory push will push the AGI timeline back by a few decades at least.

For the long version, read on.

On March 16, 1979, the movie The China Syndrome was released, starring Jane Fonda. In the movie, Jane Fonda plays an intrepid television reporter who discovers that a nuclear power plant came perilously close to melting down through its containment structure “all the way to China” (hence the title of the movie). The power plant officials cover up this near disaster, threatening Jane Fonda and her crew. The ending of the movie is left deliberately ambiguous as to whether or not the coverup succeeded.[1]

Two days after the movie was released, an executive at Westinghouse, one of the major providers of nuclear power equipment and services, was quoted in the New York Times as saying the movie was a “character assassination of an entire industry”, upset that the power plant officials were portrayed as “morally corrupt and insensitive to their responsibilities to society”. This proved to be unfortunate timing.

Twelve days after the movie was released, the Three Mile Island reactor in Pennsylvania experienced a partial meltdown. This was caused by the mishandling of a “loss of coolant accident”, which in turn was caused by inadequate training and misleading signals from safety instruments. Over the course of the partial meltdown, sketchy and misleading communication from the power plant officials caused state regulators to lose faith in them and call in the Nuclear Regulatory Commission (NRC). Unfortunately, the NRC lacked the authority to tell the power plant officials what to do, and so everyone bumbled along until the situation was eventually resolved. Later on, it came out that the plant operator had been falsifying safety test results for years.

Nobody died as a result of the Three Mile Island partial meltdown. There was also no clear rise of radiation-related sickness or cancer around Three Mile Island in the years following the partial meltdown. While residents did have to temporarily evacuate, 98% of people returned within 3 weeks. Testing of local deer did find increased levels of cesium-137, but not at dangerous levels.

However, the partial meltdown and subsequent scandal still proved to be a boon for anti-nuclear advocates. In May of 1979, 65,000 people attended an anti-nuclear rally in Washington, DC, joined by then-California governor Jerry Brown. In September of 1979, 200,000 people protested nuclear power in New York City, with a speech by none other than Jane Fonda. Speaking of Jane Fonda, The China Syndrome was a sleeper hit at the box office, earning $51.7 million on a budget of $5.9 million, and getting nominated for 4 Academy Awards, including Best Original Screenplay.

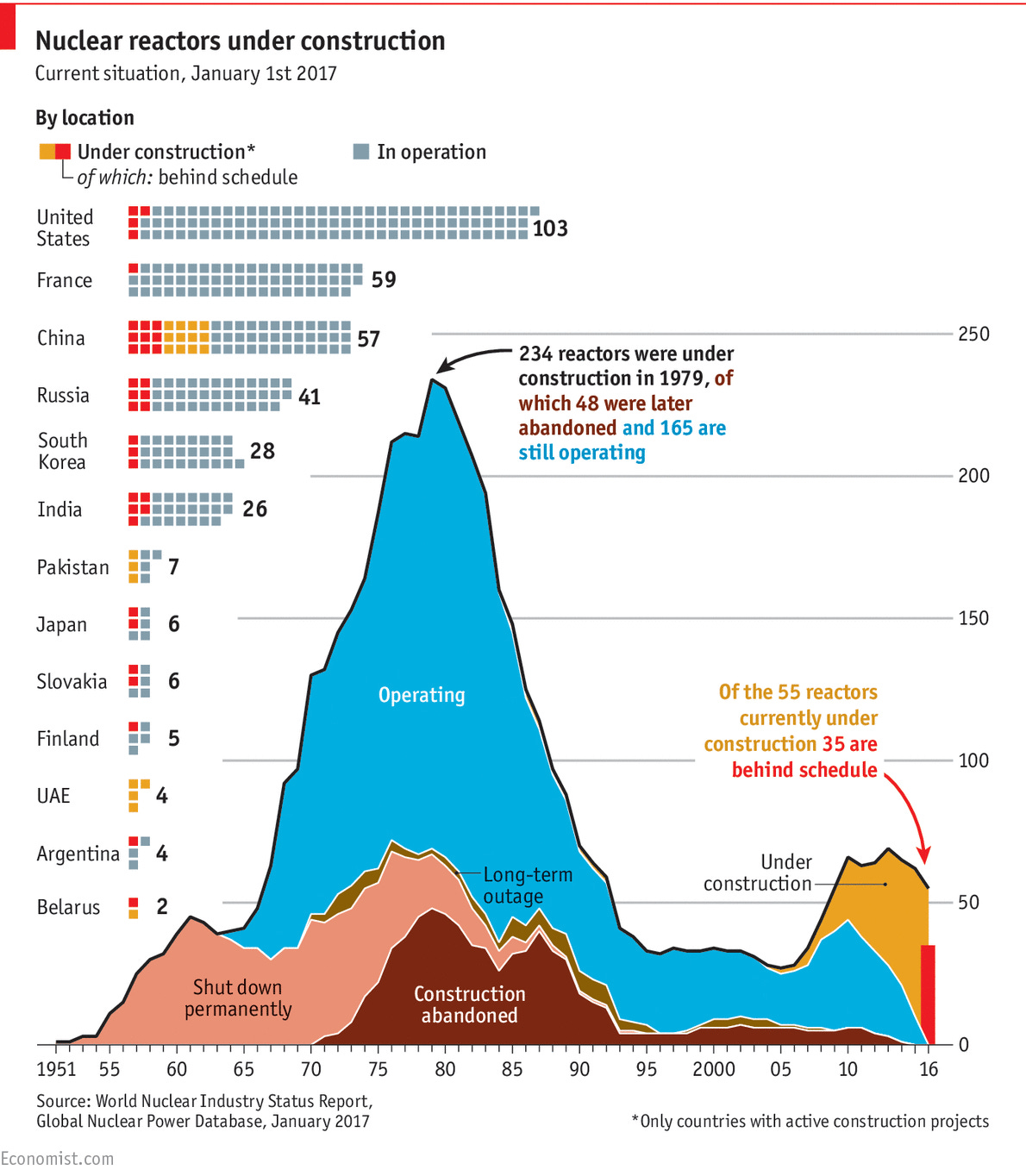

More importantly for our purposes, this incident proved to be a decisive turning point for nuclear power. Nuclear power plant construction reached a global peak in 1979. No new reactors were authorized to begin construction in the US between 1979 and 2012, even though retail electricity sales just about doubled during that time. Chernobyl was certainly the final nail in the coffin for nuclear development, but the real decline started after Three Mile Island.

This also made the commercial case for new types of nuclear reactors much more difficult. Any investor who might have been tempted to invest in, say, a new type of molten salt reactor, or any intrepid operator who might have had a bright idea for how to improve one, must have been dissuaded by the fact that their idea would never see the light of day. In other words, there must have been a nuclear winter (although of a different sort than the one usually discussed).

When that Westinghouse executive was quoted in that New York Times article, he had no idea that he was speaking at the absolute peak of his industry. How could he have? Sure, he must have been aware of the anti-nuclear sentiment among the left, but that sentiment hadn’t stopped the growth of the nuclear industry thus far. He probably figured that the results that the nuclear industry was getting spoke for themselves.

But, as we know now, that was a crucial turning point. Westinghouse and the rest of the nuclear industry surely saw themselves careening towards a Jetsons future, where everything was powered by nuclear energy. They thought the only barriers were technological, and treated the social barriers high-handedly. After all, they knew better. Instead, they were careening towards our current, carbon-heavy future, where very few things are powered by nuclear energy and a lot of things are powered by dead organisms that produce global warming as a byproduct.

I fear that the dominant intellectual discourse around the rise of Artificial General Intelligence (AGI) is making the same mistake as that Westinghouse executive: treating an exciting, dangerous new technology as a purely technological phenomenon while ignoring its social aspects. In the introduction to the prize that this essay is written for, Nick Beckstead of the FTX Future Fund claims there’s a 20% probability of AGI arising by January 1, 2043 and a 60% probability of AGI arising by January 1, 2100. In fact, part of the prize conditions involve convincing him to significantly change these estimates. However, when he explains his reasoning for these estimates, he only cites technical considerations: hardware progress, algorithmic progress, deep learning progress, etc. If the history of nuclear power in the US teaches us anything, it’s that social barriers can easily push back any timeline by 20 years or more.

This factor has been overlooked because, up until now, AI has largely enjoyed the privileged position of being self-regulated. The only people who determine what various forms of AI can and can’t do have been, mostly, the people who work on them. The only exceptions to this in the majority of AI development[2] have been social considerations, like how Google included “AI ethicists” in the release of its text-to-image AI, Imagen, to help avoid the creation of pornography and anything that smacks of “social stereotypes”. These are not existential threats to the development of AGI, though. They’re actually incredibly easy to get around, as evidenced by the rapid creation of text-to-image pornography generators.[3]

However, if the general public (specifically, the protesting class) ever takes AI risks as seriously as the rationalist/EA community does, this will quickly change. There will be much more serious and existential barriers to the development of AI. There will be mass protests in the streets, government regulators swinging in from the trees, and padlocks placed on server rooms. Every proposed algorithmic change by OpenAI will have to go through three rounds of FCC review, each costing $500k and determined by 12 bureaucrats who all struggle with their email. AI progress will grind to a halt just as nuclear did.

Many people would stop me here and say, “But, if this happens in the West, it would just let China surge ahead with developing their own AI!” This is a mistake. If AI risks are taken seriously by Western bureaucrats, it’s probable that they would also eventually be taken seriously by Chinese bureaucrats. This can happen in two ways. First of all, if Western bureaucrats take AI risks seriously, some segment of the Chinese population will as well. They will protest in some form or another against AI. Protests in China have occasionally been effective in halting undesirable projects, such as protests against nuclear facilities. This is the less likely way for AI development to be halted in China.

More likely, Chinese bureaucrats would hear Western bureaucrats worrying about how AGI could become independent of, or a competing power center to, the government, and take action to stop that from happening to their own government. Historically, the Chinese government has reacted dramatically to any trend that threatens “social stability” or its control over the Chinese populace. It is very willing to sacrifice economic growth to do so. Witness its crackdown on Chinese tech giants, its harsh COVID lockdowns, or its persecution of literally any group that becomes popular (e.g. Falun Gong). If Western bureaucrats think that AI could be disruptive to the government’s functioning, Chinese bureaucrats will take that very seriously and react accordingly.

In short, if the Western chattering classes think that AI development poses a huge safety risk, they will convince politicians to place it under so many regulatory burdens that it will effectively be halted. If autocratic countries think that AI development could pose a risk to social stability or their own control, they will smother it. The AGI timeline could be extended by decades.

The real question is how that would happen. That is, how does AI risk go from being a concern for Internet nerds to being a concern for the rest of humanity? When do we start to see Instagram posts about AI risk along with a donate link? When does Alexandria Ocasio-Cortez mention AI risk in a viral tweet?

There are two main ways I see this happening. The first is if an intermediate level AI makes a heavily publicized mistake. The second is through the military.

So, the first isn’t hard to imagine. Somewhere in between where we are now and AGI, there are going to be intermediate level AIs, specialized AIs that are good at specific tasks that used to be done by humans. We’re already seeing some of that now with text models and text-to-image models. These intermediate level AIs will improve and expand in usage as they start to develop “strategic” thinking and are able to recommend or make management-type decisions.

Intermediate AIs will make big splashes as they’re introduced and take over parts of the economy that used to be human-only. If one of them goes rogue or is used for an unsavory purpose, there could be a huge crackdown on AI. Here are a few possible scenarios:

1. Someone creates a bundled AI that buys domains, fills them up with propaganda, then creates fake social media profiles to promote them. A future right wing populist is caught using one, possibly even winning an election through this tactic.

The social media platforms, domain registrars, and search engines band together to ban all such AIs. Congress demands that any provider of datasets large enough to create such an AI verify customers’ identities and how they’re using these datasets, with heavy criminal penalties for misuse. If someone is caught using or creating an AI that creates spam, they will get fined heavily. Anyone who has provided them with datasets that assist in this will also get fined heavily.

These “know your customer” regulations are so onerous that only academic labs and big corporations are able to overcome them, and these big academic/industrial teams are too heavily internally regulated to produce anything resembling AGI in the near future.

2. Someone creates an AI that’s a total security system for schools: cameras to detect threats, automated gates and fences to lock down a school until the threat is eliminated. These become commonplace in schools.

During a school shooting, the AI refuses to lift the lockdown until the threat is neutralized, which prevents students from evacuating. Parents demand that all AI security systems have human-in-the-loop overrides at their most basic level, a requirement that is then extended to all AI in general. It becomes impossible for AI to conceive of and execute plans independently.

3. Someone develops an AI populist: a VR avatar on video sites that packages and regurgitates conservative blog posts for views. At some point, it convinces a bunch of people that a school in California is staffed entirely by pedophiles. They descend upon the school and riot, causing millions of dollars’ worth of damage and a few deaths.

It’s decided that AI speech is not constitutionally protected, and, in fact, creators of AI that generates speech can be held legally liable for damages caused by that speech. Not only do the creators of the AI populist get sued, but the creators of the speech model they use get sued, too. AI development slows immensely as everyone tries to figure out exactly what they’d be liable for.

4. A drug cartel uses AI to develop new forms of methamphetamine. This is an all-in-one solution: it models methamphetamine, sees where substitutions can be made, orders the substitutions, then tests them in an automated lab. This proves to be a really effective model, and the drug cartel floods the markets with various forms of cheap methamphetamine.

The Drug Enforcement Administration (DEA), in an effort to combat this, declares jurisdiction over all forms of AI. Anyone who sells or produces AI models has to make sure that their AI models are not being used to aid in the sale of illegal drugs. The DEA declares various forms of AI development as restricted, making the development of even routine deep learning take months of paperwork.

None of these would be death knells for AI development. All of them would make AI development more difficult, more expensive, and subject to more regulation. If new AI models become subject to enough regulation, they will become as difficult and expensive to make as a new drug. If people get really freaked out about AI models (e.g. if somehow a bunch of kids die because of an AI), they will become as difficult and expensive to develop as a new nuclear plant.

Those were examples of one category of ways I can see AI development getting caught up in social restrictions. Another way I can see is from the military.

The military has actually been worried about AI for a while. Since the 70s, the military has technically had AI that’s capable of detecting threats and dealing with them autonomously through the “Aegis Combat System”, which basically links together the radar, targeting, and missile launching of a warship. So, the question since the 70s has been to what extent the AI should be allowed to operate on its own.

In the 70s, the answer was simple: there needs to be a “human in the loop”. Otherwise, Aegis would literally just fire missiles at whatever came on its radar. As AI capabilities have grown, however, this question has become more complicated. There are now drones which can obey commands like “search and destroy within this targeted area”. Supposedly, the drones ask for confirmation that their targets are properly identified and ask for confirmation to fire, which means they have three levels of human in the loop: confirming the area, confirming the target, and confirming the command to fire.

But, given that the military loves blowing up stuff, there’s always a push to take humans further out of the loop.[4] For area confirmation, well, take Aegis. Aegis is largely defensive anyway. So, as the argument goes, there’s no need to ask a human to confirm the area in which Aegis could be active. The area to look for targets is whatever area from which something could attack the ship.

Meanwhile, for confirming the target, isn’t the whole point of AI that it’s smarter than humans? If the billion-dollar AI says something is a target, and the $30k/year grunt at the other end says it’s not, why are we believing the grunt? This is especially true if the AI was trained on how a bunch of soldiers identify targets. Then, we’re basically weighing the expertise of a bunch of soldiers vs. the expertise of one.[5]

For confirming the command to fire, that’s all well and good if time isn’t of the essence. But, if it is, that might be time we don’t have. Maybe you’re trying to defend your warship, and a plane is coming into range right now. In 15 seconds it’ll be able to drop a bomb on your ship. Should the AI wait to confirm? Or, maybe you’re trying to assassinate a major terrorist leader, and this is the one time he’s been out in public in the last 15 years. In 30 seconds, he’ll go back to his cave. Can’t the drone just fire the missile itself?

Maybe these arguments seem convincing to you. Maybe they don’t. However, they are steadily convincing more and more military people. Already, autonomous drones have been allegedly used by Turkey to hunt down separatists. And, in the US, we’re speedrunning Armageddon as our generals make the same arguments as above about our nuclear arsenal, trying to change “human-in-the-loop” to “human-on-the-loop”.

It is very easy to imagine a high-profile military mishap involving the same level of intermediate AI mentioned previously. Hopefully, this mishap will not involve the nuclear arsenal. It’s also easy to imagine a determined adversary using intermediate AI to attack the US, like a terrorist group releasing an autonomous drone onto US soil.

Either way, AI could become a military matter. If AI is considered a military technology, it could become as difficult for a civilian to develop advances in AI as it is for a civilian to develop advances in artillery. Once AI becomes solely the province of the military and its contractors, AI could become as expensive and slow to develop as fighter jets. To give you an idea of what that entails, by the way, the F-35 fighter jet program has been in development for more than two decades and is projected to cost over $1 trillion over its lifetime.

There are many possible inflection points for the development of AI. I don’t know if any of the specific scenarios I’ve outlined will happen. What I do know, however, is that at some point in the course of the development of AGI, a Three Mile Island-type incident will happen. It will be scary and get a lot of press coverage, and a lot of talking heads who have never thought about AI before will suddenly start to have very strong opinions.

At that point, the AI alignment community will have a choice. For those in the AI alignment community who are terrified of AGI, it won’t be that hard to just add fuel to the fire. You will be able to join hands with whatever coalition of groups decides that AGI is the new thing they have to stop, and you will be able to put so much regulation on AI that AGI will be delayed for the indefinite future. After all, if there’s one thing the modern world is good at, it’s red tape.

However, for those in the AI alignment community who think AGI is promising but dangerous (like myself), this will be a tricky incident to navigate. Ideally, the outcome of the incident will be that proper guardrails get put on AI development as it proceeds apace. However, that is not guaranteed. As Westinghouse and the entire nuclear industry found out, things can get rapidly out of hand.

[1] This summary of The China Syndrome and of the Three Mile Island incident is based on Wikipedia, to be honest.

[2] Not including self-driving cars and other parts of AI that directly interface with the real world. Those have had serious regulation. On the other hand, nobody thinks that’s where AGI is going to come from, probably in part due to all the regulation. This is part of the point of this essay.

[3] I’m not linking to the porn generators here, but they are very Googleable.

[4] I took a lot of my understanding of how the military thinks about AI from this essay about military AI. I’m linking it here because I think it’s interesting.

[5] If this seems abstract to you, imagine that it’s been decided that white pickup trucks with a lot of nitrogen fertilizer in a certain area of the Middle East are almost certainly car bombs, as nobody in this area would be transporting that much nitrogen fertilizer for agriculture. The grunt left Ohio for the first time 3 months ago, and he thinks that it’s totally natural to truck around that much nitrogen fertilizer. The AI might correctly identify this truck as a target, and he might not.