Like everyone else on Twitter, I spent last weekend playing with ChatGPT, OpenAI’s new large language model (basically, a language-based AI). And, like everyone else, I was hugely impressed by its capabilities. ChatGPT handles text-based tasks incredibly well.

From my own line of work (well, one of them), I gave it some tricky test problems from the GMAT, specifically the sort of problems that you’d need to answer correctly to get a good enough score for Harvard Business School.

Of these problems, ChatGPT did very well on difficult reading comprehension, not very well on difficult grammar, well on difficult logic, and somewhat well on difficult math. Overall, I’d say it could probably score around 650-700 out of 800, which is not good enough for Harvard, but good enough for a top-40 business school. If you want to try these questions yourself, here’s a list of the hardest questions in each category that it both answered correctly and explained correctly. The answers to these questions are in this footnote [1]:

1. Question 5 of this reading comprehension passage, question 2 of this reading comprehension passage, question 1 of this reading comprehension passage

2. This grammar question.

3. This explain or resolve logic question and this logical inference question

4. This algebra problem and this percentages question (note: it did much more poorly on geometry, as you might expect)

This is, to be honest, pretty amazing. It’s hard to remember sometimes that there’s not a person on the other end of this. I mean, add an essay, and this could literally be a business school application. Just for fun, I’ve included a ChatGPT-written essay at this link, along with an essay that an admissions website tells me won admission to Harvard Business School. I think you’ll be able to tell which one was ChatGPT-generated, but they’re not that far apart. If you want to know which one is AI-generated, follow the footnote [2].

Aside from simply needing to get better, the only real weakness I see in ChatGPT is that its wrong answers are very convincing. I’ve been teaching the GMAT for years, and even I find it disconcerting when ChatGPT confidently tells me the wrong answer is correct and defends its reasoning with plausible logic. It’s especially disconcerting when it strongly defends its wrong answer under questioning. I have no idea if there’s a technical solution to this, but it does seem to be a serious problem.

So, when I think of ChatGPT’s strengths, I’d rank them in this order:

1) Reading comprehension

2) Writing

3) Logic

4) Math

5) Explainability (i.e. the ability to explain and correct its reasoning)

So, ChatGPT excels in areas where either it doesn’t matter if it’s wrong or it’s very easy to tell when it’s wrong. The best fit for the former is a first draft of pretty much anything, as long as there’s a good editor at the end; the best fit for the latter is stuff like code, or perhaps a help desk for easily checked instructions (like putting together IKEA furniture).
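To make the "easy to tell when it's wrong" case concrete, here's a minimal sketch in Python. Everything in it is made up for illustration: `candidate_percent_change` is a hypothetical stand-in for code a chatbot might hand back (with a deliberate bug), and the test cases are simple percentage problems with known answers. The point is only that a handful of cheap checks can expose a confident wrong answer in code, in a way that isn't available for a test question or a trial protocol.

```python
def candidate_percent_change(old, new):
    # Hypothetical stand-in for model-written code. Deliberately buggy:
    # it divides by the new value instead of the old one.
    return (new - old) / new * 100


def reference_percent_change(old, new):
    # The textbook definition: change relative to the starting value.
    return (new - old) / old * 100


def failing_cases(func, cases, tolerance=1e-9):
    """Return every case where func's output differs from the known answer."""
    failures = []
    for (old, new), expected in cases:
        got = func(old, new)
        if abs(got - expected) > tolerance:
            failures.append(((old, new), expected, got))
    return failures


# Made-up test cases: ((old, new), expected percent change).
CASES = [
    ((100, 150), 50.0),
    ((200, 100), -50.0),
    ((80, 100), 25.0),
]

if __name__ == "__main__":
    for name, func in [
        ("candidate", candidate_percent_change),
        ("reference", reference_percent_change),
    ]:
        print(name, "failures:", failing_cases(func, CASES))
```

Running it lists the cases the candidate gets wrong, however plausible the code looks on a first read.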

For my original business, tutoring, I actually don’t think ChatGPT is a great choice right now. It would take a disciplined student to only take the correct things that ChatGPT says and ignore the rest. Most students, after realizing ChatGPT was confidently wrong about one or two answers, would likely stop using it.

But that’s not what this essay is really about. There’s a lot of hype about using ChatGPT for drug discovery, as there always is whenever there’s a new AI advance. This essay is to put the kibosh on that, with a small caveat.

There are a few problems with trying to use ChatGPT for drug discovery. First, even if we just stick with text-based stuff, ChatGPT isn’t sophisticated enough to, say, design a phase 1 experiment. It’s really only capable of emulating the methods section of a paper, and even then it makes mistakes.

The natural response to this is to just use ChatGPT for preclinical work, like target identification. This is where I’ve seen a number of AI drug development plays end up, with some sort of language about “Analyzing millions of data points to determine targets” or “Machine learning to optimize target validation”. These are appealing in theory but, in practice, they never really advance beyond what a human can get from reading the abstract of a paper.

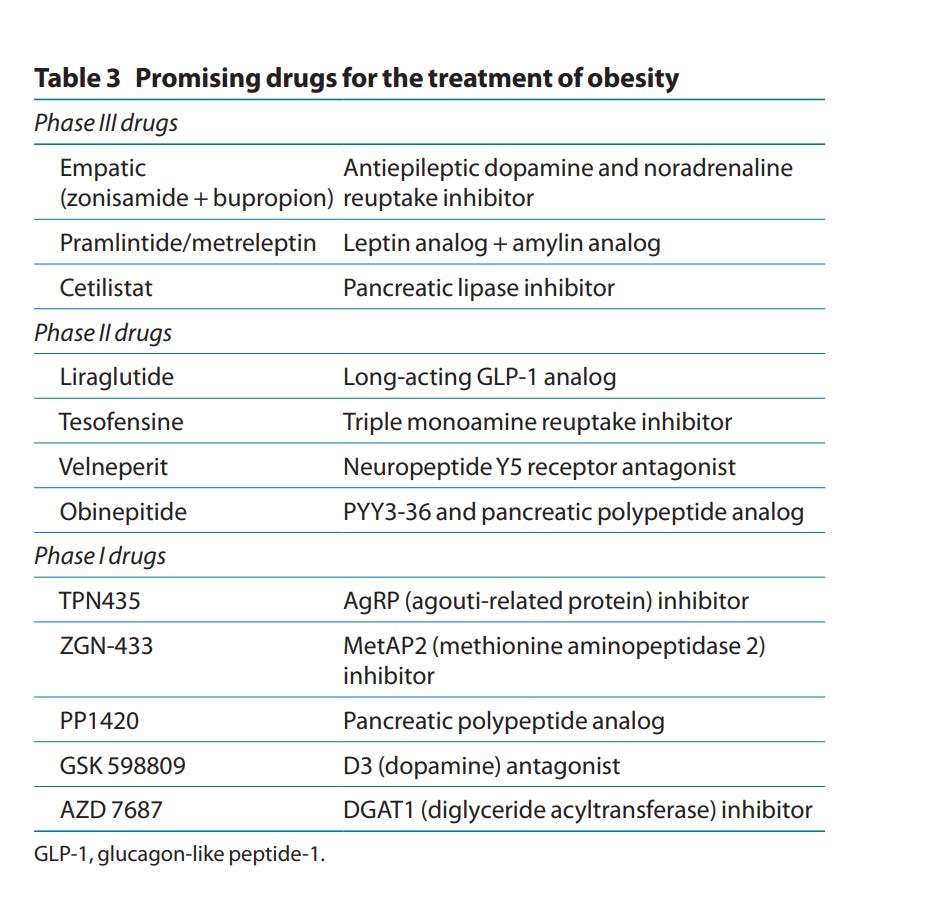

Like, I could ask ChatGPT, “What is a potential target for a drug to treat obesity?” and it answers leptin, which is fair, as that’s a hormone that affects fat deposition. But leptin is also in the table in this 2011 list of promising drug targets for obesity, so ChatGPT didn’t add much there. Plus, since 2011, the GLP-1 agonists have done way, way better than leptin, so ChatGPT isn’t even reflecting the state of the art in 2021.

Other parts of drug discovery are way too important to trust to ChatGPT’s overconfidence. For example, as I discussed in my last essay, manufacturing drugs is really, really hard. There’s no way I’d ask ChatGPT for advice on manufacturing drugs because I wouldn’t be able to figure out if it was wrong until I had already invested a lot of time and money.

Same thing with, say, figuring out the minutiae of the protocol of a safety trial, or even writing a patent. Even if ChatGPT is just writing the first draft, I’d worry that I’d have the same trouble figuring out its mistakes as I do when it answers test questions, and those errors would creep into the final version.

So, by and large, I don’t expect ChatGPT to have a big effect on the actual nuts and bolts of drug discovery right now. However, in the near future, I can imagine a version of ChatGPT being trained on a specific project’s documents. In that case, I could imagine even this version of ChatGPT being very useful for communication, like summarizing a protocol or recalling a proposed change to a formulation. After all, currently I spend a lot of time looking back through old documents and emails trying to remember what exactly we had decided to do about a certain issue, and it’d be nice to just ask a bot directly.
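As a rough illustration of the "just ask a bot" idea, here's a minimal Python sketch of the lookup half only. It is not a language model, just word-overlap search, and the notes in `NOTES` are invented for the example. A real system would pair something like this with a model that reads the matching document and writes the answer.

```python
import re

# Hypothetical project notes, invented purely for illustration.
NOTES = {
    "2022-03-02 formulation meeting": (
        "Proposed change: switch the buffer from phosphate to citrate "
        "to improve stability."
    ),
    "2022-04-11 protocol review": (
        "Safety protocol updated: dosing interval extended from 12 to 24 hours."
    ),
    "2022-05-20 vendor call": (
        "Vendor confirmed lead time of six weeks for the new excipient."
    ),
}


def tokenize(text):
    """Lowercase the text and return its set of alphanumeric words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def best_match(question, notes):
    """Return the title of the note sharing the most words with the question."""
    question_words = tokenize(question)
    scored = [
        (len(question_words & tokenize(title + " " + body)), title)
        for title, body in notes.items()
    ]
    score, title = max(scored)
    # If no words overlap at all, report that nothing was found.
    return title if score > 0 else None


if __name__ == "__main__":
    print(best_match("What change did we propose to the formulation buffer?", NOTES))
```

Even this crude version points back to the source document, which matters here: the answer can be checked against the original note instead of being taken on faith.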

But that’s in the future. For right now, I think drug discovery is safe.